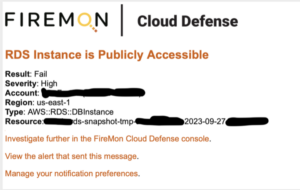

We don’t usually monitor customer accounts for findings and alerts ourselves, but a customer recently asked us to take a more proactive role in their journey toward automated remediation. At the customer’s request, we were keeping an eye on a few things when… something interesting happened.

Our CTO received an alert indicating that an RDS instance in AWS was publicly exposed. However, when he checked with the client, the instance was already gone. Stranger still, a public RDS instance was being created every night, only to be terminated 50 minutes later. This kind of activity could easily be missed by periodic, scheduled assessments. Our CTO promptly informed the client and retrieved the terminated instance’s metadata from our inventory. After a thorough (and quick: it took only a few minutes) investigation of the triggering events and the instance configuration, he discovered that a public instance was being created nightly from the latest snapshot of a different database. It was then exposed to a small list of known corporate IP addresses (which was good news) before being terminated shortly afterward.

The Investigation

The client conducted their own investigation and found that this was part of an automated ETL process that ran in their data center. A scheduled job on the cloud side created the ephemeral instance as public and restricted access to a handful of IP addresses (five, which still seemed like a lot); the data center would then connect and extract the data. We never found out where the actual data transformation happened, but that isn’t particularly relevant here.

This presented an interesting challenge for the security team: the alert was valid, but there was no actual security issue at hand (although there are certainly more secure ways to handle this than a public RDS instance). Exempting the instance was not an option, since a new one was created every night. Exempting the entire account from the check would also be risky, because a genuinely exposed RDS instance could then slip through unnoticed. Even a tag-based exemption posed a risk, as someone could easily change the process to expose the instance to an untrusted IP address while the tag kept suppressing the alert.

Lessons Learned

My advice was to fix the underlying process rather than complicate the assessment side. The reality is that this process is not ideal from a procedural standpoint: allowing public RDS instances is never good form. Sometimes you need them, but only as a last resort. In most cases, database instances should sit in a private subnet and be accessed through a dedicated or VPN-based connection from wherever you need to reach them.

While this didn’t turn out to be a security exposure, there are still some interesting lessons to learn. First, I call this a “false false positive”: the alert flagged a real condition that needed attention, but that condition did not necessarily pose a risk in this particular scenario. There was no actual data leakage, but the client couldn’t have known that without investigating and talking to the team responsible for the resource and the process.

Second, this is a tough one to fully prevent with Service Control Policies (SCPs). There is no condition key to prevent public RDS instances, nor are there condition keys to prevent opening database ports (or any ports) in security groups.

Third, because the instances are ephemeral, you might miss the exposure entirely unless your monitoring runs in real time or on a very short cycle. I actually cover this topic in my incident response training, since there are many situations where something can be exposed and its data extracted within a tight timeframe, then destroyed to eliminate evidence. This is why incident responders always need the ability to jump directly into deployments, along with access to an inventory that lets them look back in time (such as AWS Config or a third-party tool like ours). API calls alone may not reveal what is actually happening, since they lack context. In this case, you would detect the exposure from the API call, but then need to look at the DB instance itself (or its record in inventory) to see which ports are exposed, and to which addresses.
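To make the “which ports, from where” question concrete, here is a minimal Python sketch. It assumes the security group ingress rules attached to the DB instance have already been collected (from inventory, or from the EC2 DescribeSecurityGroups API) as dicts shaped like that API’s IpPermissions field. The function names, the PostgreSQL port 5432, and the allowlist are hypothetical and chosen only for illustration; the sketch ignores rules that reference other security groups or prefix lists, and it does not check the instance’s own PubliclyAccessible setting.

```python
import ipaddress

# Hypothetical helpers: they operate on dicts shaped like the IpPermissions
# field returned by EC2 DescribeSecurityGroups (assumed already collected).


def sources_for_port(ip_permissions, port):
    """Return the IPv4/IPv6 CIDR ranges that ingress rules allow to reach `port` over TCP."""
    sources = []
    for rule in ip_permissions:
        protocol = rule.get("IpProtocol")
        if protocol == "-1":
            # "-1" means all protocols and all ports.
            covers_port = True
        elif protocol in ("tcp", "6"):
            covers_port = rule.get("FromPort", 0) <= port <= rule.get("ToPort", 65535)
        else:
            covers_port = False
        if not covers_port:
            continue
        # Rules that reference other security groups or prefix lists are ignored here.
        sources.extend(r["CidrIp"] for r in rule.get("IpRanges", []))
        sources.extend(r["CidrIpv6"] for r in rule.get("Ipv6Ranges", []))
    return sources


def outside_allowlist(sources, allowlist):
    """Return the source CIDRs that are not contained in any allowlisted network."""
    allowed = [ipaddress.ip_network(cidr, strict=False) for cidr in allowlist]
    flagged = []
    for cidr in sources:
        net = ipaddress.ip_network(cidr, strict=False)
        # subnet_of() raises TypeError across IP versions, so compare versions first.
        if not any(net.version == a.version and net.subnet_of(a) for a in allowed):
            flagged.append(cidr)
    return flagged


if __name__ == "__main__":
    # Example rules using documentation address ranges (RFC 5737).
    rules = [
        {"IpProtocol": "tcp", "FromPort": 5432, "ToPort": 5432,
         "IpRanges": [{"CidrIp": "203.0.113.10/32"}, {"CidrIp": "0.0.0.0/0"}]},
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
         "IpRanges": [{"CidrIp": "198.51.100.0/24"}]},
    ]
    exposed = sources_for_port(rules, 5432)
    print(exposed)                                         # ['203.0.113.10/32', '0.0.0.0/0']
    print(outside_allowlist(exposed, ["203.0.113.0/24"]))  # ['0.0.0.0/0']
```

An allowlist comparison like this answers “exposed only to known corporate addresses?” for a single point in time. Because the process behind it can change at any moment, a clean result is better treated as context for the investigation than as grounds to suppress the alert.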

Fourth, because preventive options are limited, you need to rely on detective and corrective controls. In this case, you can directly detect the CreateDBInstance API call and check for the PubliclyAccessible=True parameter. Continuous monitoring for public RDS instances with cloud security posture management (CSPM), again from your CSP or a vendor like us, is also highly recommended. For remediation, one option is to terminate the instance as soon as its creation is detected. A better approach, however, may be to call ModifyDBInstance and set PubliclyAccessible to false. If you do this, only implement such automation in deployments where you are certain public RDS instances are not allowed. The day you break an expected, authorized database connection that’s been running for three years because you failed to communicate with the team is probably a good day to pull out that resume.
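The detect-then-correct approach might look something like the following minimal Python sketch, written as a handler that receives CloudTrail records (for example, delivered through an EventBridge rule; the delivery mechanism is assumed and not shown). The requestParameters key names (publiclyAccessible, dBInstanceIdentifier) follow CloudTrail’s usual camelCase rendering of API parameters, but they are an assumption to verify against real events from your own account. Note that the instance in this story was built from a snapshot, which goes through RestoreDBInstanceFromDBSnapshot rather than CreateDBInstance, so the sketch watches both, plus ModifyDBInstance, since an existing instance can be made public after creation. If PubliclyAccessible is omitted from a request, RDS applies a default that depends on the network configuration, so this only catches explicit requests. The function names and the allow_remediation switch are hypothetical, and rds_client is assumed to be a boto3 RDS client. A real handler would probably also need to wait until a newly created instance is available before modifying it; that retry logic is omitted.

```python
# Hypothetical detection/remediation handler for CloudTrail records.
# Assumption: records are dicts with CloudTrail's top-level fields and
# camelCase requestParameters; verify key names against your own events.

WATCHED_EVENTS = {
    "CreateDBInstance",
    "RestoreDBInstanceFromDBSnapshot",  # how the nightly ETL instance was built
    "ModifyDBInstance",                 # an existing instance made public later
}


def public_db_instance_id(record):
    """Return the DB instance identifier if the record explicitly requests a
    publicly accessible RDS instance; otherwise return None."""
    if record.get("eventSource") != "rds.amazonaws.com":
        return None
    if record.get("eventName") not in WATCHED_EVENTS:
        return None
    if record.get("errorCode"):
        return None  # the call failed, so nothing was created or changed
    params = record.get("requestParameters") or {}
    # An omitted flag falls back to an RDS default that depends on the
    # network configuration, so this only catches explicit requests.
    if params.get("publiclyAccessible") is not True:
        return None
    return params.get("dBInstanceIdentifier")


def handle_record(record, rds_client, allow_remediation=False):
    """Alert on an explicitly public RDS instance and, only when
    allow_remediation is True, switch PubliclyAccessible off instead of
    terminating the instance."""
    db_id = public_db_instance_id(record)
    if db_id is None:
        return "ignored"
    if not allow_remediation:
        return f"alert: {db_id} requested as publicly accessible"
    # rds_client is assumed to be a boto3 RDS client (or a compatible stand-in).
    rds_client.modify_db_instance(
        DBInstanceIdentifier=db_id,
        PubliclyAccessible=False,
        ApplyImmediately=True,
    )
    return f"remediated: {db_id} set to PubliclyAccessible=False"
```

Because the remediation call itself produces a ModifyDBInstance record with publiclyAccessible set to false, the handler ignores its own change rather than looping. Leaving allow_remediation off by default reflects the warning about disrupting authorized connections: with it switched on in this customer’s account, the nightly ETL job would simply have broken.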

Ultimately, this incident did not pose a security risk for the customer. It did, however, highlight the need for more secure processes, and they are actively exploring better ways to handle this workflow. I find this example particularly interesting because ephemeral data exposures, leaks, and exfiltration are genuine concerns, and what we initially discovered looked indistinguishable from an actual attack. Only after digging in did we, and the client’s security team, realize it was part of an expected process. It’s crucial to work closely with your teams to cultivate good habits, to make sure your monitoring can handle the highly volatile nature of the cloud, and, when something unusual like this occurs, to engage with the people responsible for the deployment.

In the cloud, sometimes the only way to tell a false positive from a really bad problem is to check with the people directly involved. As I wrote in Schrödinger’s Misconfigurations, attackers use the same API calls (and, unfortunately, the same identities) as legitimate users, rather than relying on some zero-day vulnerability.

Guest Speaker

Rich Mogull

SVP of Cloud Security, FireMon

Rich is the SVP of Cloud Security at FireMon, where he focuses on leading-edge cloud security research and implementation. Rich joined FireMon through the acquisition of DisruptOps, a cloud security automation platform based on research he did as CEO of Securosis. He has over 25 years of security experience and currently specializes in cloud security and DevSecOps, having started working hands-on in the cloud nearly 10 years ago. Prior to founding Securosis and DisruptOps, Rich was a Research Vice President at Gartner on the security team.